LenslessMic

Audio Encryption and Authentication via Lensless Computational Imaging -- Official Demo Page.

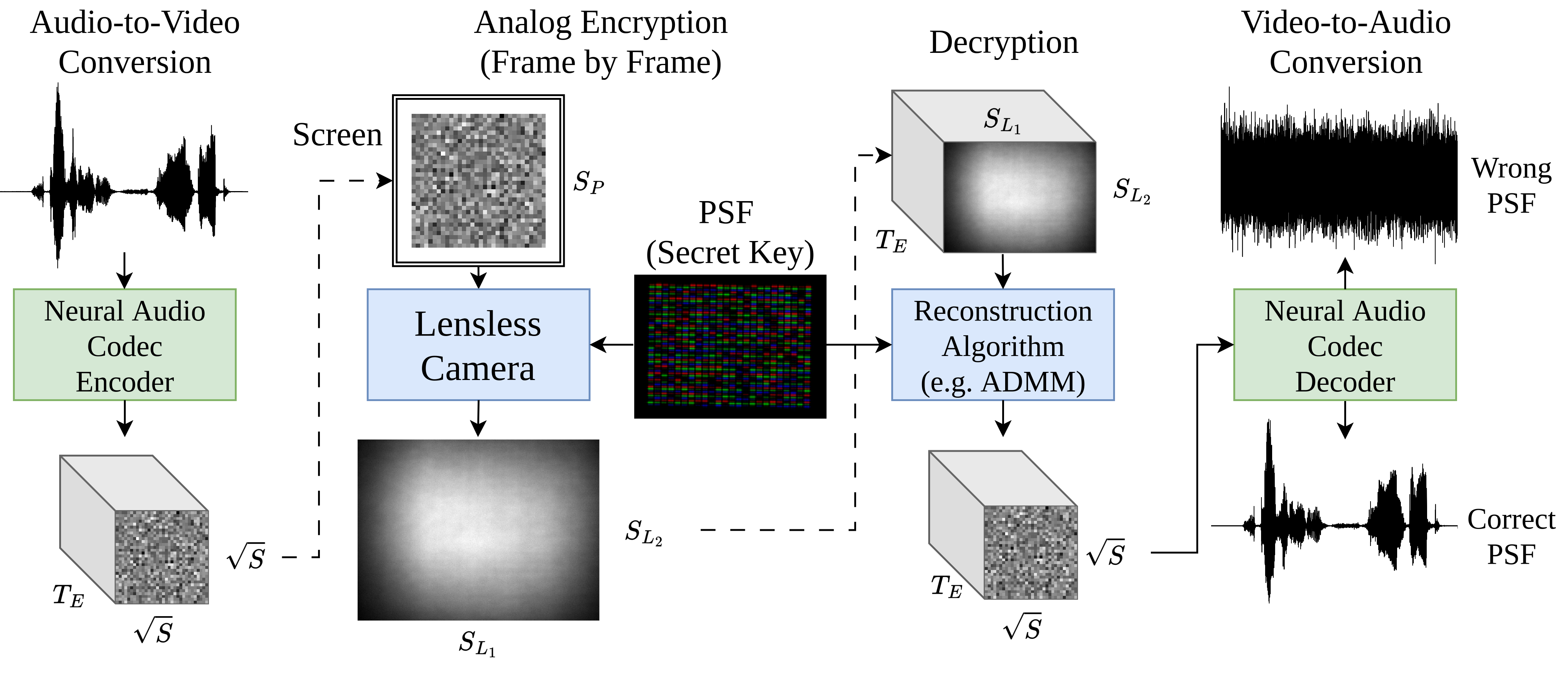

With society’s increasing reliance on digital data sharing, the protection of sensitive information has become critical. Encryption serves as one of the privacy-preserving methods; however, its realization in the audio domain predominantly relies on signal processing or software methods embedded into hardware. In this paper, we introduce LenslessMic, a hybrid optical hardware-based encryption method that utilizes a lensless camera as a physical layer of security applicable to multiple types of audio. We show that LenslessMic enables (1) robust authentication of audio recordings and (2) encryption strength that can rival the search space of 256-bit digital standards, while maintaining high-quality signals and minimal loss of content information. The approach is validated with a low-cost Raspberry Pi prototype and is open-sourced together with datasets to facilitate research in the area.

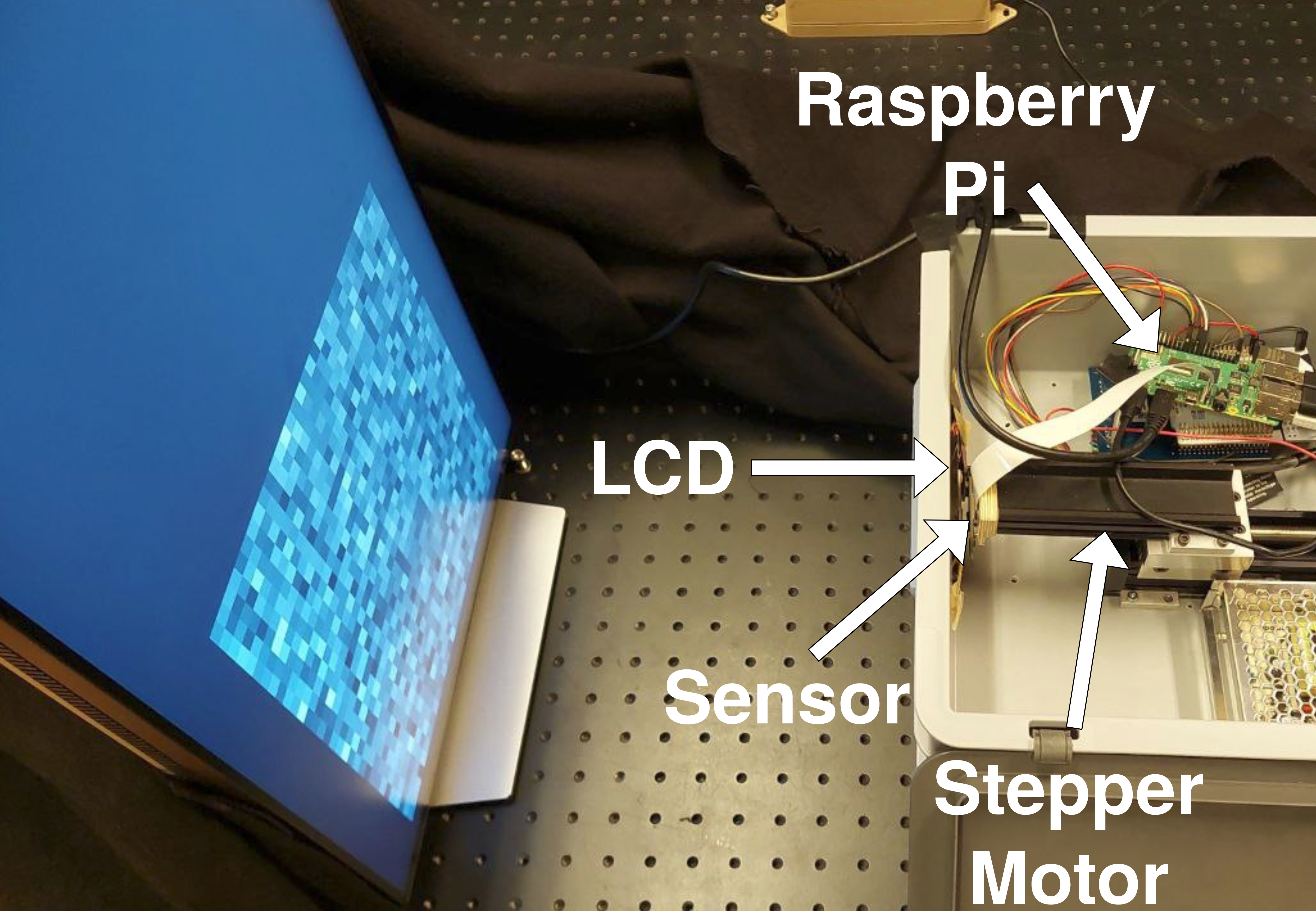

Hardware Setup

Our setup is based on DigiCam. We adapt it to display the latent representation of a neural audio codec. During the capture process, we cover the system with a black cloth to block external illumination.

Demo Samples

Below we show example audio from the collected Librispeech and SongDescriber datasets for different models: Learned (with different \(g, r\) variations), R-Learned, NoPSF, and ADMM-100.

All models and train/test datasets are published in our Huggingface Collection.

- Learned: a trainable reconstruction algorithm based on Unrolled ADMM. It uses DRUNet-based pre- and post-processors (3.9M and 4.1M parameters) and a PSF corrector (128K parameters) to account for noise and model mismatch (8.1M parameters in total). Trained on the train-clean set.

- R-Learned: the same as Learned but trained on train-random.

- NoPSF: a trainable reconstruction algorithm that uses only (codec representation, lensless measurement) pairs for training and does not use PSF in any way. Based on DRUNet with 8.1M parameters. Trained on train-clean set.

- ADMM-100: a vanilla reconstruction algorithm that uses the Alternating Direction Method of Multipliers (ADMM) with 100 iterations. The penalty parameters are set to $\mu_1=10^{-4}$, $\mu_2=10^{-4}$, $\mu_3=10^{-4}$, and the weight of the regularization is $\tau=2\cdot 10^{-4}$.
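For orientation, the penalty parameters and the regularization weight of ADMM-100 can be read against the ADMM formulation commonly used for lensless imaging. The sketch below assumes that standard formulation rather than restating the exact one used here: the symbols $\mathbf{y}$ (lensless measurement), $\mathbf{H}$ (convolution with the PSF), $\mathbf{C}$ (sensor crop), and $\Psi$ (finite-difference operator) are notation introduced only for this illustration, and the pairing of each $\mu_i$ with a constraint is an assumption.

```latex
% Assumed objective: non-negative least squares with a sparsity-promoting regularizer
\hat{\mathbf{x}} = \arg\min_{\mathbf{x} \ge 0}
  \tfrac{1}{2}\,\lVert \mathbf{y} - \mathbf{C}\mathbf{H}\mathbf{x} \rVert_2^2
  + \tau\,\lVert \Psi\mathbf{x} \rVert_1

% Assumed variable splitting, with one penalty parameter per constraint
\mathbf{v} = \mathbf{H}\mathbf{x} \;\; (\mu_1), \qquad
\mathbf{u} = \Psi\mathbf{x} \;\; (\mu_2), \qquad
\mathbf{w} = \mathbf{x} \;\; (\mu_3)
```

Under this reading, $\tau$ trades data fidelity against the regularizer, while $\mu_1$, $\mu_2$, and $\mu_3$ control how strongly each split variable is tied to $\mathbf{x}$ at every iteration.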

Audio Reconstruction

Below we provide recordings from the test-clean set of Librispeech. We recommend listening to the reconstructions before the ground-truth and codec versions to avoid the phonetic-restoration effect, i.e., unintelligible audio may become meaningful once you know the actual content of the speech. Methods with \(g \ge 2\) increase the robustness of LenslessMic so that this effect does not reveal speech content from NoPSF reconstructions.

| Method | 237-126133-0018 | 4446-2273-0026 | 5105-28241-0013 | 5142-33396-0062 | 7127-75947-0032 | 8455-210777-0042 |
|---|---|---|---|---|---|---|

Learned and Codec recordings sound very similar, showcasing the high-quality reconstruction ability of LenslessMic. Since it may be possible to understand part of the speech content from NoPSF reconstructions, we provide LenslessMic variants with improved robustness that operate on grouped frames. The examples are provided below. The \(g=3\) case provides the best balance between security robustness and reconstruction quality.

| Method | 237-126133-0018 | 4446-2273-0026 | 5105-28241-0013 | 5142-33396-0062 | 7127-75947-0032 | 8455-210777-0042 |
|---|---|---|---|---|---|---|

We test whether LenslessMic is applicable to other neural audio codecs by collecting a dataset with X-codec instead of DAC. The results are presented below. While some utterances sound almost as good as the DAC ones, several recordings have a severe reverberation-like or filtering-like effect that decreases intelligibility. In general, LenslessMic is applicable to other codecs and even in a cross-codec scenario; however, training on the actual data is required for high-quality reconstruction. R-Learned achieves better quality because it is trained on train-random data and is not overfitted to any single codec.

| Method | 237-126133-0018 | 4446-2273-0026 | 5105-28241-0013 | 5142-33396-0062 | 7127-75947-0032 | 8455-210777-0042 |
|---|---|---|---|---|---|---|

Besides, LenslessMic works on music data too (we downsampled the dataset to 16 kHz, so some instruments are slightly distorted):

| Method | 27.599227.6s | 33.1336433.6s | 43.175043.6s | 46.5346.6s | 56.76656.6s |
|---|---|---|---|---|---|

Audio Encryption and Authentication

LenslessMic reconstruction results in noise if the provided PSF is wrong. This is the core property behind the robustness and accuracy of LenslessMic authentication. Below you can find examples:

Let \(W \in (0, 1]\) be the ratio of correctly determined pixels of the PSF. If the minimum \(W\) required to obtain intelligible audio satisfies \(W \ge \frac{K \log_b 2}{N}\), where \(N\) is the number of pixels in the PSF and \(b\) is the bit-depth, then the LenslessMic system has an encryption strength with a search space equivalent to a secret key of size \(K\). Below we provide audio samples for different \(W\). \(W=7\%\) and \(W=4\%\) correspond to the AES-256 and AES-128 search spaces, respectively (we rounded \(W\) up to the next integer percent, so \(W=7\%\) actually has an even bigger search space of \(K=272\)).
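The bound above is easy to evaluate numerically. The following is a minimal sketch that implements the inequality exactly as written, passing \(b\) straight into the logarithm base. The function names and the values of `N` and `B` are hypothetical and chosen only for illustration; they are not the parameters of the actual prototype.

```python
import math


def min_psf_ratio(key_bits: float, num_pixels: int, b: float) -> float:
    """Smallest W satisfying W >= K * log_b(2) / N."""
    return key_bits * math.log(2, b) / num_pixels


def equivalent_key_bits(w: float, num_pixels: int, b: float) -> float:
    """Key size K matched by a given W (the bound solved for K)."""
    return w * num_pixels * math.log2(b)


def round_up_to_percent(w: float) -> int:
    """Round a ratio up to the next integer percent, as done for W in the text."""
    return math.ceil(w * 100)


# Hypothetical values, NOT taken from the paper or the prototype.
N = 4096  # number of PSF pixels (assumption)
B = 8     # value used for b in the formula (assumption)

for key_bits in (128, 256):
    w = min_psf_ratio(key_bits, N, B)
    w_percent = round_up_to_percent(w)
    # Rounding W up enlarges the search space beyond the target key size.
    k_rounded = equivalent_key_bits(w_percent / 100, N, B)
    print(f"K={key_bits}: W >= {w:.4f}, rounded to {w_percent}%, K after rounding = {k_rounded:.1f}")
```

Because \(W\) is rounded up, the key size after rounding is never smaller than the target, which is the same reason the text reports \(K=272\) for \(W=7\%\).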

Results for \(g=3\):

| Method | 237-126133-0018 | 4446-2273-0026 | 5105-28241-0013 | 5142-33396-0062 | 7127-75947-0032 | 8455-210777-0042 |
|---|---|---|---|---|---|---|

Results for \(g=2\):

| Method | 237-126133-0018 | 4446-2273-0026 | 5105-28241-0013 | 5142-33396-0062 | 7127-75947-0032 | 8455-210777-0042 |
|---|---|---|---|---|---|---|

Results for \(g=1\) (i.e. standard Learned):

| Method | 237-126133-0018 | 4446-2273-0026 | 5105-28241-0013 | 5142-33396-0062 | 7127-75947-0032 | 8455-210777-0042 |
|---|---|---|---|---|---|---|

We hear that recovering speech content (without the phonetic-restoration effect) and speaker identity with \(W=7\%\) is not possible even for \(g=1\). Moreover, the audio still resembles noise. Therefore, an intruder cannot break the authentication algorithm presented above via a brute-force attack.

\(g=3\) is more robust but leads to slightly worse reconstruction quality. Below we visualize how the \(g=3\) reconstruction changes as \(W\) goes from \(0\%\) to \(100\%\).

Captured Video Representation

Below we provide examples of the video representation of audio obtained using DAC, its lensless measurement, and different reconstructions. Each frame originally lasts \(20\) ms. To make details easier to see, we slow the video down to \(100\) ms per frame.

We note that NoPSF often produces smoother frames than Learned and R-Learned, which are sharper. This means that NoPSF is not able to fully recover the image from the multiplexing effect of the lensless camera. ADMM-100 completely fails to recover frames.

The video below shows how the reconstruction of the same frame improves from \(W=0\%\) to \(W=100\%\). More specifically, we plot the per-pixel squared \(l_2\)-difference \((\hat{x}-x)^2\) between the reconstructed and codec frames.
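The per-pixel difference map described above can be computed in a few lines. This is a minimal sketch assuming frames are stored as NumPy arrays of equal shape; the function name and the random test frames are hypothetical stand-ins, not data from the released datasets.

```python
import numpy as np


def squared_error_map(x_hat, x) -> np.ndarray:
    """Per-pixel squared difference (x_hat - x)^2 between two frames."""
    x_hat = np.asarray(x_hat, dtype=np.float64)
    x = np.asarray(x, dtype=np.float64)
    if x_hat.shape != x.shape:
        raise ValueError("frames must have the same shape")
    return (x_hat - x) ** 2


# Hypothetical frames: a random "codec" frame and a noisy "reconstruction".
rng = np.random.default_rng(0)
codec_frame = rng.random((32, 32))
reconstruction = codec_frame + 0.1 * rng.standard_normal((32, 32))

error_map = squared_error_map(reconstruction, codec_frame)
print(error_map.shape, float(error_map.mean()))
```

Plotting `error_map` for each value of \(W\) gives one frame of such a visualization, and its mean is the MSE of that frame.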

Extra Experiments

We provide three additional experiments:

- In the paper, we noted that the search space for a brute-force attack can also be restricted by the RVQ of the codec. With \(C=12\) codebooks of size \(1024\), this leads to \(2^{120}\) possible codebook outputs. This is not an issue because the intruder would need to search over all \(T_E\) frames, which leads to a search space of \(2^{120T_E}\). However, to be safe, one may fine-tune or train a neural audio codec to have a larger search space. We fine-tuned DAC on the full Librispeech corpus with \(C=13\) codebooks of size \(1024\) (i.e., a search space of \(2^{130}\)) and a \(16\times16\) latent representation. The smaller latent representation yields even higher reconstruction quality. We collected train-clean and test-clean variants for this codec.

- We showed that the method is applicable to different types of audio. However, it is also important to generalize to different environments and conditions. Even though DAC itself works on both clean and noisy speech, training only on train-clean can overfit the reconstruction algorithm to clean data. To account for this, we collected a small subset of \(150\) audio files from Librispeech train-other (see Tab. 1 in the paper). We fine-tuned the Learned (\(g=1\)) system on a mix of the train-other and train-clean datasets with a constant learning rate of \(2\cdot 10^{-5}\) for \(15\)K steps. We refer to this system as FT-Learned. (Remark: fine-tuning only on train-other leads to a drop in performance on clean data, i.e., catastrophic forgetting, so we use a mixture.)

- (Only models, no tables.) We investigated different loss functions. Our final combination \(\mathcal{L}_{\text{raw, SSIM}} + \mathcal{L}_{\text{SSIM}} + \mathcal{L}_{\text{MSE}}\) performs best. Using only \(\mathcal{L}_{\text{MSE}}\) leads to poor convergence and suboptimal quality, showing the importance of structure-based losses. Adding \(\mathcal{L}_{\text{SSIM}}\) to MSE helps considerably, and the third term \(\mathcal{L}_{\text{raw, SSIM}}\) further improves quality. We also investigated audio-based losses, such as the \(l_1\)-distance between the waveforms or Mel spectrograms of the codec and reconstructed audio; however, they had a negative effect on performance. We provide the corresponding checkpoints in our Huggingface Collection.
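The RVQ search-space figures quoted in the first experiment above follow from simple counting: each frame selects one entry per codebook, and frames are chosen independently. The sketch below reproduces that arithmetic; the function name and the example frame count are hypothetical.

```python
import math


def rvq_search_space_bits(num_codebooks: int, codebook_size: int, num_frames: int = 1) -> float:
    """log2 of the number of possible RVQ outputs over `num_frames` frames."""
    bits_per_frame = num_codebooks * math.log2(codebook_size)
    return num_frames * bits_per_frame


# Figures from the text: C=12 and C=13 codebooks of size 1024, one frame.
print(rvq_search_space_bits(12, 1024))  # exponent for the original DAC setting
print(rvq_search_space_bits(13, 1024))  # exponent for the fine-tuned DAC setting

# Hypothetical number of frames T_E, used only to show the linear growth.
print(rvq_search_space_bits(12, 1024, num_frames=50))
```

The exponent grows linearly with the number of frames, which is why the per-frame restriction imposed by the RVQ does not reduce the overall search space in practice.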

The results are provided below.

| Method | Test Set | g/r | PSNR | SSIM | MSE | ViSQOL | SI-SDR | Mel | STOI | WER | SMA | QM-1/2 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|

We see that the decreased representation size (\(16\times 16\)) improves reconstruction quality in comparison to Learned (\(32\times32\)) on test-clean, while also benefiting from a bigger search space. For test-other, we see that neither train-clean nor train-random is enough for the reconstruction algorithm to perform well on noisy data. However, adding only a small number of train-other samples to the training data enabled FT-Learned to achieve high reconstruction quality on both clean and noisy test sets. Hence, LenslessMic is applicable to noisy data too.

Audio examples for \(16\times16\):

| Method | 237-126133-0018 | 4446-2273-0026 | 5105-28241-0013 | 5142-33396-0062 | 7127-75947-0032 | 8455-210777-0042 |
|---|---|---|---|---|---|---|

Audio examples for test-other:

| Method | 2414-159411-0027 | 3005-163389-0011 | 3528-168669-0015 | 3764-168670-0006 | 4350-9170-0022 | 7902-96595-0024 |
|---|---|---|---|---|---|---|

Finally, we show that FT-Learned performs well on test-clean too:

| Method | 237-126133-0018 | 4446-2273-0026 | 5105-28241-0013 | 5142-33396-0062 | 7127-75947-0032 | 8455-210777-0042 |
|---|---|---|---|---|---|---|